Federico Badaloni

Design Notes

On the Information Architecture of Music

Abstract

This article frames music through the lens of information architecture in order to draw a few considerations on information architecture through the lens of music. It is a thoroughly revised and expanded version of the author’s opening keynote at World Information Architecture Day in Verona, Italy, on February 18, 2017.

Cultural Experiences vs User Experiences

Even though designers often do not explicitly address the point when reflecting on their process, design work starts by designing what is “behind the design” (Hobbs 2014). This “design of the abstract”, typical of contemporary information architecture approaches (Resmini 2013), is necessary to establish boundaries, limitations, and rules, and to choose the conceptual tools that best fit the task of structuring the relationships between objects in time and space so that they shape a compelling, meaningful, and actionable system. This is an oddly overlooked but foundational point, since “(t)he entire hypothesis of experience design (...) is that the ephemeral and insubstantial can be designed. And that there is a kind of design that can be practiced independent of medium and across media” (Garrett 2009).

Something “ephemeral” lasts for a very short time; something “insubstantial” either lacks solidity or has no physical existence. So what is ephemeral design for? What value does it provide? Garrett himself answers these questions in his closing plenary for the ASIS&T 2016 Information Architecture Summit in Atlanta, Georgia, later revised and published as “The Seven Sisters” (Garrett 2016) in May of that year:

Use means that the value of the experience is not in the experience itself; it’s in what the experience helps us to accomplish. When we use something, the experience we have using it is not actually the reason why we engage with it. The value of the experience is extrinsic to the experience. But these experiences with extrinsic value — user experiences — are not the only kinds of experiences humans create. Many of our experiences have intrinsic value, that is to say that the value of the experience is having the experience. This is the experience of art, of music, of a million forms of human creative endeavor. These are cultural experiences that have no value or purpose beyond their own existence (Garrett 2016).

When we talk about design, we often focus on the techniques that we need to adopt in different situations, and rarely on the mental effort that different kinds of design entail. But understanding where and why we struggle while designing is an excellent starting point for finding and refining our tools and methods. In this regard, Garrett says that

cultural experiences are no less designed than user experiences even though their creators may not identify as designers. In fact, you could argue that cultural experiences are more designed, because the primacy of the task in user experiences is replaced by the primacy of the experience itself. The whole creation of the experience is an exercise in conscious intent, which can slip away when your only success criterion is utility (Garrett 2016).

Ennio Morricone, the world-famous Italian composer, drew an important distinction between what he called “musica applicata” (Lucci 2007), literally “applied music” and more properly rendered as “music created to be put to practical use”, and “absolute music”, “music that is free from bonds”. The aim of “musica applicata” is to serve an external purpose, for example a movie scene, while the aim of absolute music is the musical experience in and of itself. Morricone told an interesting anecdote about the different amounts of effort the two types of music require:

I betrayed absolute music, the one my mentor Goffredo Petrassi taught me, and this was true suffering for me. One day I met him along via Frattina [in Rome] and he said: ‘I’m sure you will make up for the time you lost’. It was fantastic for me. And then I really recovered a little of this time, because writing a movie soundtrack took me a month or two weeks, sometimes just one week. But composing absolute music took much more time, even six months for a single work (Bendía 2016).

Morricone’s story supports Garrett’s argument that cultural experiences, such as those of “art, of music, of a million forms of human creative endeavor” that have “no value or purpose beyond their own existence” (Garrett 2016), are “more designed”: they require their creators, who “may not identify as designers”, to focus on the experience itself and its intrinsic value rather than on the task it accomplishes and its extrinsic value. Therein also lies a crucial question, which Garrett poses directly: “is there some discrete set of principles and practices that can be applied to both kinds of experiences? What would it take to define such a set?” (Garrett 2016). The application of composition and music theory to information architecture can provide a preliminary answer.

Relationships as Information Architecture

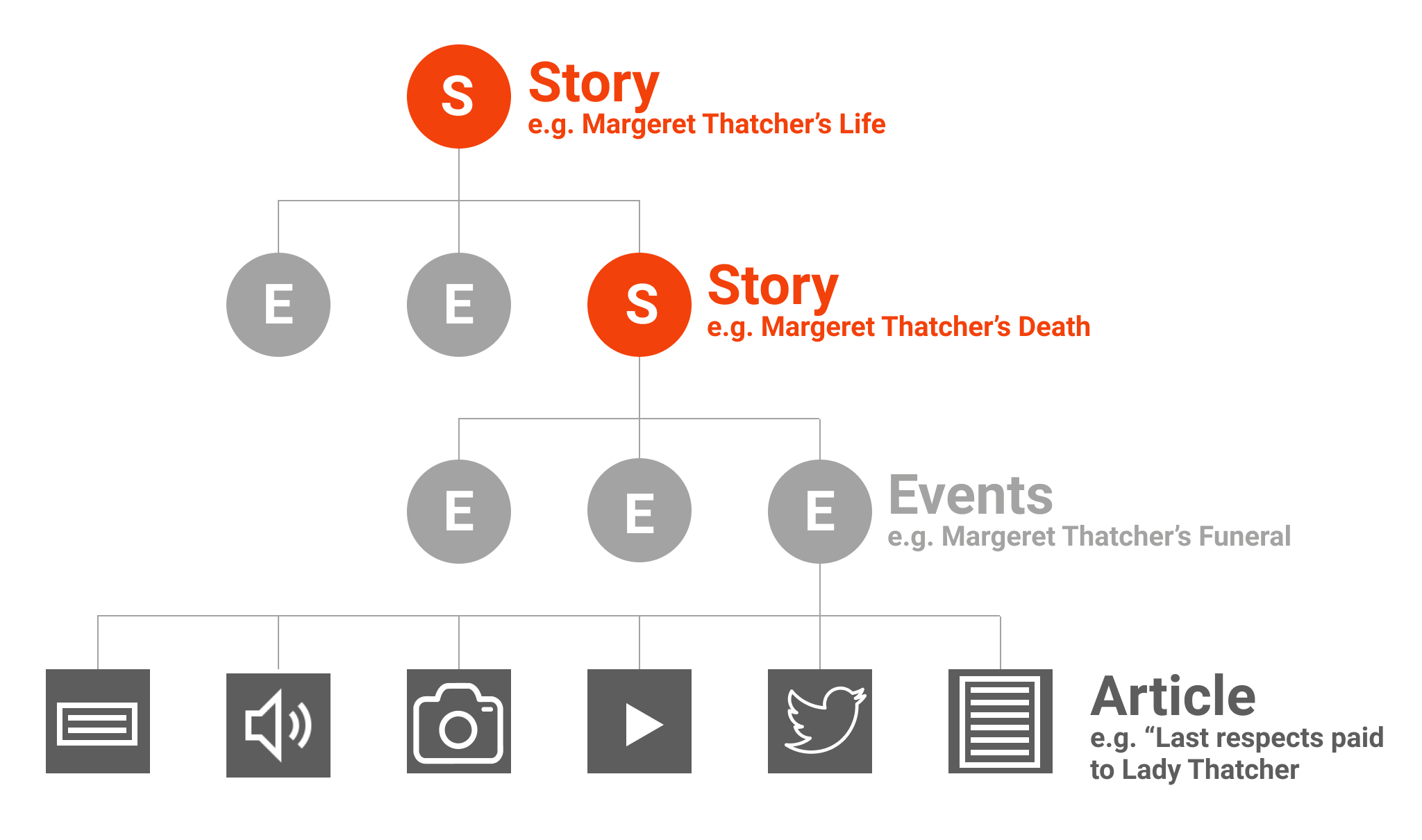

“Effective information architects make the complex clear” (Wurman 2001, p. 23). To make the complex clear, to make multiple pieces of information understandable, designers have to establish and design relationships among these pieces (Resmini 2012). This system of meaningful relationships also needs to consider those relationships that will be established by the people who will later interact with that information. The starting point of such a system is the creation of the structures and syntax that will determine which relationships are meaningful and which are not: in information architecture terms, its ontology, its taxonomy, its vocabulary. The image in Fig. 1 represents the simplified schema of the relationships between entities belonging to the BBC’s information architecture in 2013.

Figure 1. BBC, conceptual representation of the storyline model (Penbrooke 2013. Graphics: W. Henke)

At the base of the pyramid there are “Articles”, “informational atoms” representing individual videos, audio files, photographs, snippets of text, or tweets. They relate to one another and form a hierarchy of larger “informational molecules”, called “Events”, and of macromolecules called “Stories”. This basic architecture, which the BBC has been constantly adapting, refining, and improving over the years, makes correlating, suggesting, and syndicating content simpler to manage and ready for algorithmic processing (Penbrooke 2013). Above all, it turns individual data points into information, providing structures of meaning.
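The atom, molecule, and macromolecule hierarchy can be sketched as a small data model. This is a minimal illustration under our own assumptions: the class names `Article`, `Event`, and `Story` mirror the labels in Fig. 1, but their fields and the hypothetical `related_articles` helper are invented for the example and are not the BBC’s actual Storyline ontology.

```python
from dataclasses import dataclass, field


@dataclass
class Article:
    """Informational atom: a single video, audio file, photo, text snippet or tweet."""
    title: str


@dataclass
class Event:
    """Informational molecule: a group of related articles."""
    name: str
    articles: list = field(default_factory=list)


@dataclass
class Story:
    """Informational macromolecule: a group of related events."""
    name: str
    events: list = field(default_factory=list)


def related_articles(story, article):
    """Hypothetical helper: suggest every other article filed under the same story."""
    return [a for event in story.events for a in event.articles if a is not article]


# Illustrative content only.
opening = Article("Polling stations open")
story = Story("General election", [
    Event("Election day", [opening, Article("Turnout video")]),
    Event("Results night", [Article("Live results feed")]),
])
print([a.title for a in related_articles(story, opening)])
# ['Turnout video', 'Live results feed']
```

Because the suggestions follow the structure rather than a hand-maintained list of links, an article added to any event of the story becomes available as a suggestion for all the others.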

Establishing and organising relationships like those in the BBC example is the essence of information architecture and the core of all creative activities, including music composition. Indeed, even when the “informational atoms” are pure sound, the necessary condition for a piece of music to be understandable is the existence of an underlying logic in the relationships between the individual atoms. It is in this sense that the various relationships between the basic musical elements described in music theory can be understood as the specific information architecture of music.

Tonality as Information Architecture

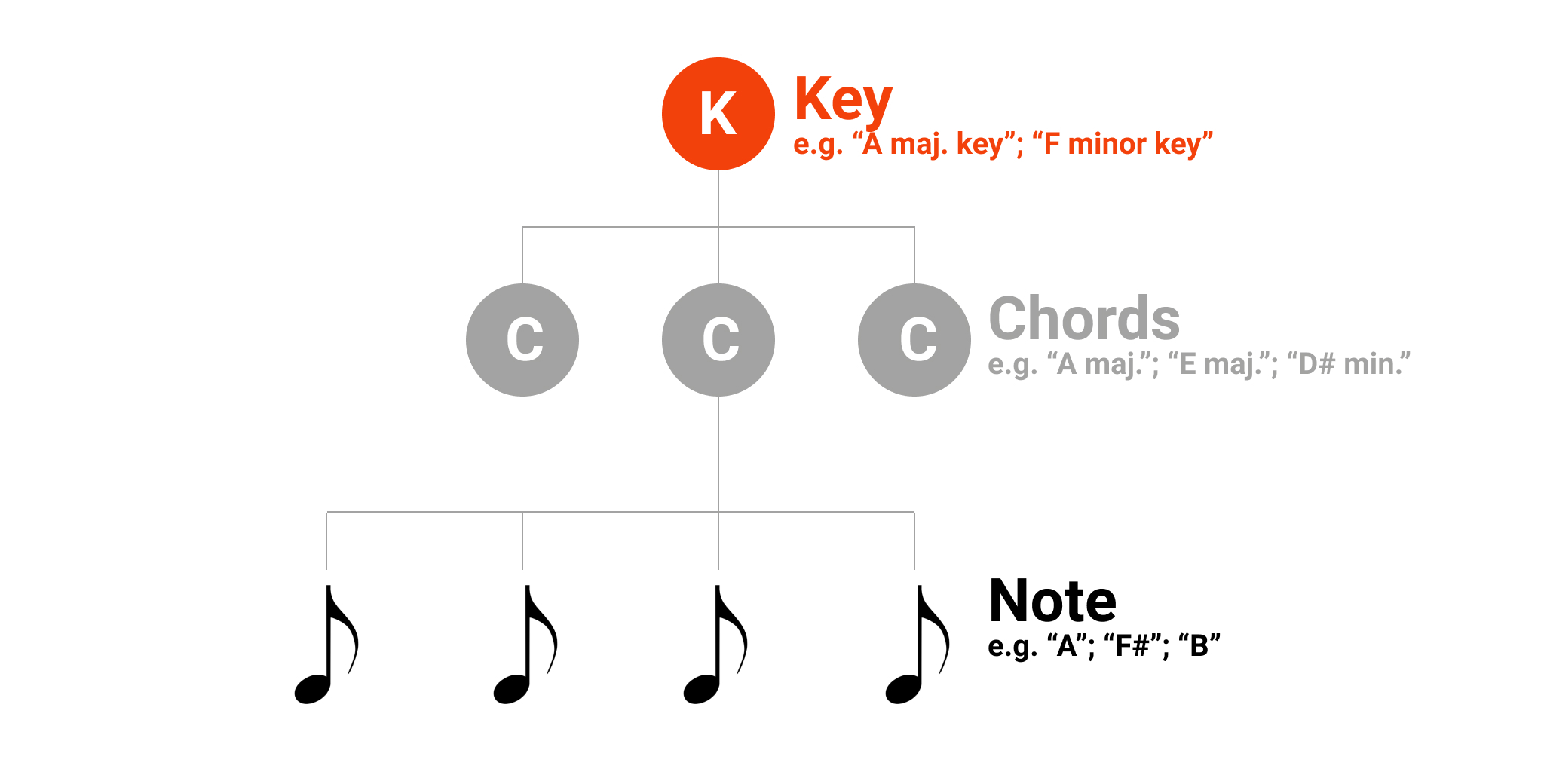

Historically, a number of different musical information architectures have followed one another over the centuries. The most familiar to our ears is called tonality. Tonality dates back to the early 17th century and was the primary musical information architecture in Western music until the early years of the 20th century, when contemporary classical music departed from it. Tonality is still in use in popular music and in most “musica applicata”. If we follow the atom, molecule, and macromolecule model, its information architecture can be described as follows:

- The informational atom is a note, a sound with a fixed pitch.

- The informational molecule is called a chord, a set of notes that are heard as if sounding simultaneously.

- The informational macromolecule is called a key, a set made by a group of notes and the chords that result from these notes.

When we listen to a piece of music, we tend to associate a particular meaning with each chord by analyzing the relationships that it has with the other chords, with varying degrees of precision depending on our naive or professional understanding of music. For example, think of how some chord sequences suggest a sense of rest whereas others suggest tension, an anticipation of relaxation or release. Or how chords may be said to have some form of directionality or implicit “follow-up”, so that listeners expect certain chords to be followed by other specific chords.

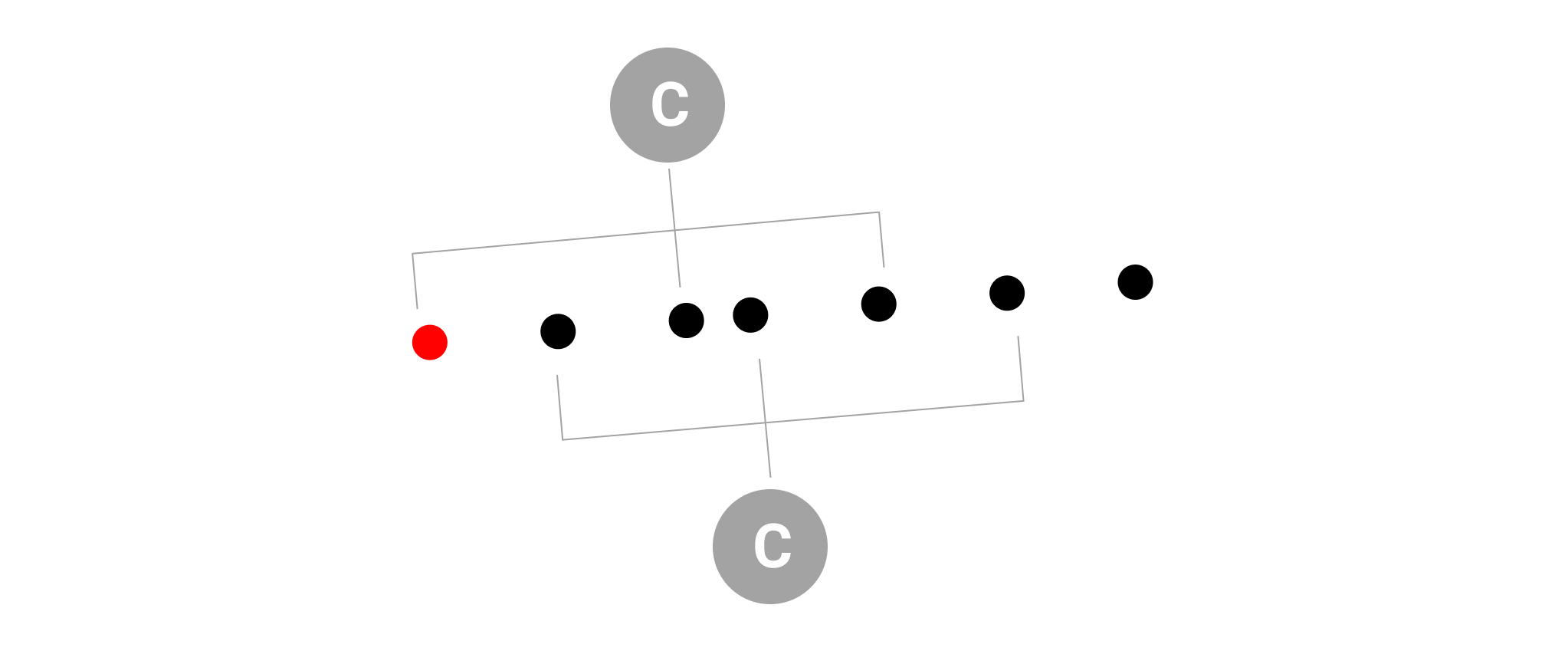

Figure 2. Tonal music information architecture (Graphics: W. Henke)

In music theory, the study and the art of combining the different meanings of chords is called harmony. From an information architecture perspective, this sentence can be rephrased as “harmony is the art of producing meanings when designing a particular acoustic experience”. By extension, information architecture itself can be understood as aiming to create a specific form of harmony, and practiced as the art and craft of designing the structures that support sense-making and meaning-making in a particular environment.

Notes and Chords

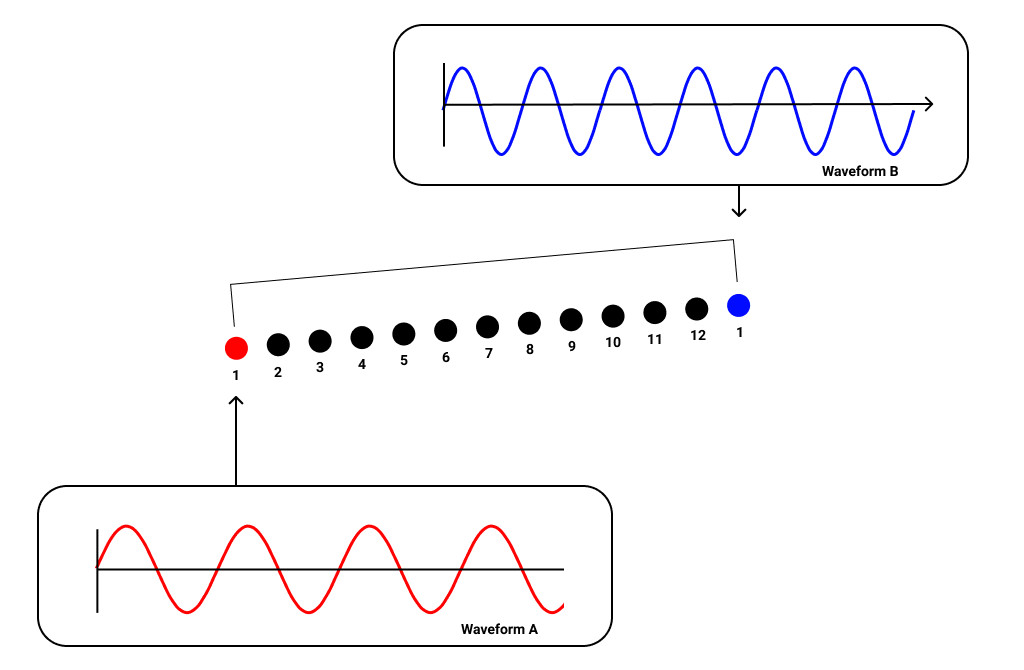

Tonality in Western music applies to an acoustic environment made of sets of twelve notes. These notes are obtained by dividing into twelve equal steps the range of frequencies between a given starting sound and the sound whose frequency is double that of the starting one. The steps are equal as ratios rather than as amounts of hertz: each one multiplies the frequency by the twelfth root of two (about 1.0595), so that twelve steps together double it. For most people raised in the Western tradition, this step is the smallest distance between two notes used in music, and it is called a semitone. A shift amounting to two semitones is called a tone.
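Because the twelve steps are equal as frequency ratios, moving up by a semitone always means multiplying by the twelfth root of two, while the width of a step in hertz grows with the pitch. The short sketch below illustrates this; the 440 Hz starting point and the function name `transpose` are our own illustrative choices.

```python
SEMITONE_RATIO = 2 ** (1 / 12)  # equal-tempered semitone: the twelfth root of two


def transpose(freq_hz, semitones):
    """Frequency reached by moving `semitones` equal-tempered steps up (down if negative)."""
    return freq_hz * SEMITONE_RATIO ** semitones


print(round(transpose(440.0, 12), 6))         # 880.0: twelve semitones double the frequency
print(round(transpose(440.0, 2), 2))          # 493.88: one tone, i.e. two semitones
print(round(transpose(440.0, 1) - 440.0, 2))  # 26.16: width in Hz of the first step above 440
print(round(transpose(880.0, 1) - 880.0, 2))  # 52.33: the same step an octave higher is twice as wide
```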

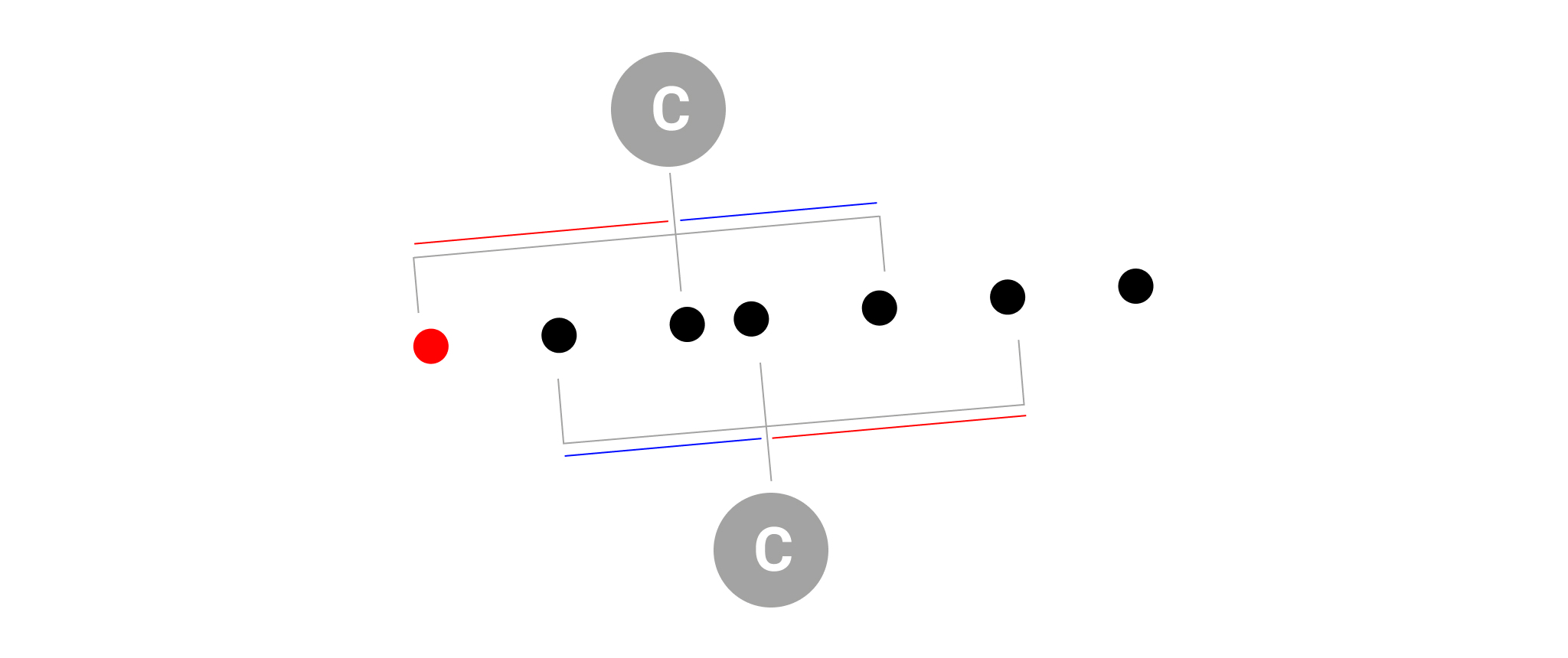

Figure 3. The twelve notes obtained by dividing into twelve equal parts the range between a given frequency and its double (Graphics: W. Henke)

Our ears perceive frequencies related by doubling (or halving) as very similar tones. For that reason we give the same name to the notes that correspond to them. For example, in English, A is the note that corresponds to a frequency of 440 Hertz, and A is also the name of the note that corresponds to a frequency of 880 Hertz (fig. 3). As a result, we experience the whole range of audible frequencies as if it were divided into a series of repeating twelve-note patterns.
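This naming rule amounts to arithmetic modulo twelve: count how many semitones a frequency lies from a reference A, then keep only the remainder. A minimal sketch, assuming equal temperament, A = 440 Hz, and sharps-only English note names; `note_name` is a hypothetical helper, not a library function.

```python
import math

# English note names counted upward from A, using sharps only (an illustrative convention).
NAMES = ["A", "A#", "B", "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#"]


def note_name(freq_hz, reference_a_hz=440.0):
    """Name the equal-tempered note nearest to freq_hz, ignoring which octave it is in."""
    semitones_from_a = round(12 * math.log2(freq_hz / reference_a_hz))
    # Frequencies related by doubling differ by a multiple of 12 semitones,
    # so the remainder modulo 12 gives them the same name.
    return NAMES[semitones_from_a % 12]


print(note_name(440.0), note_name(880.0), note_name(220.0), note_name(261.63))
# A A A C
```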

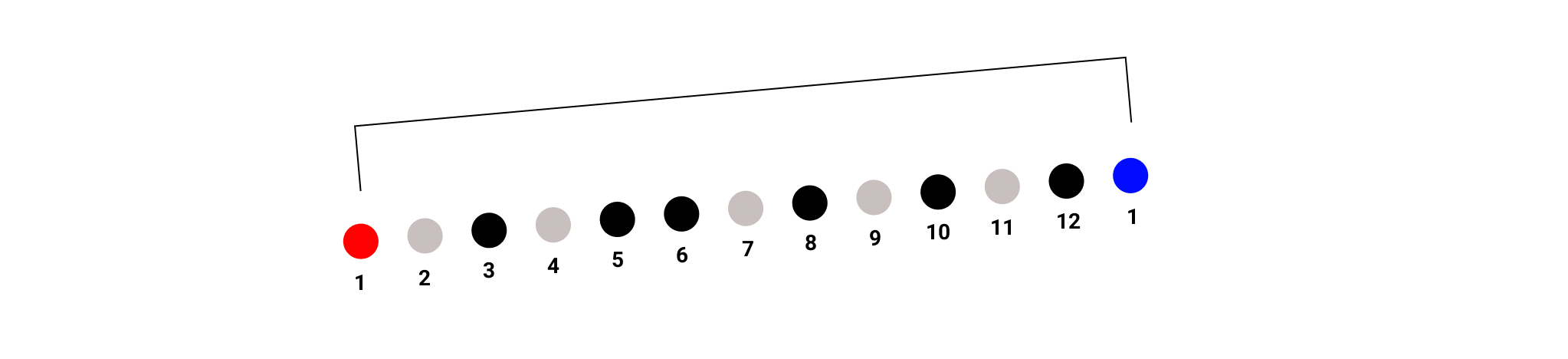

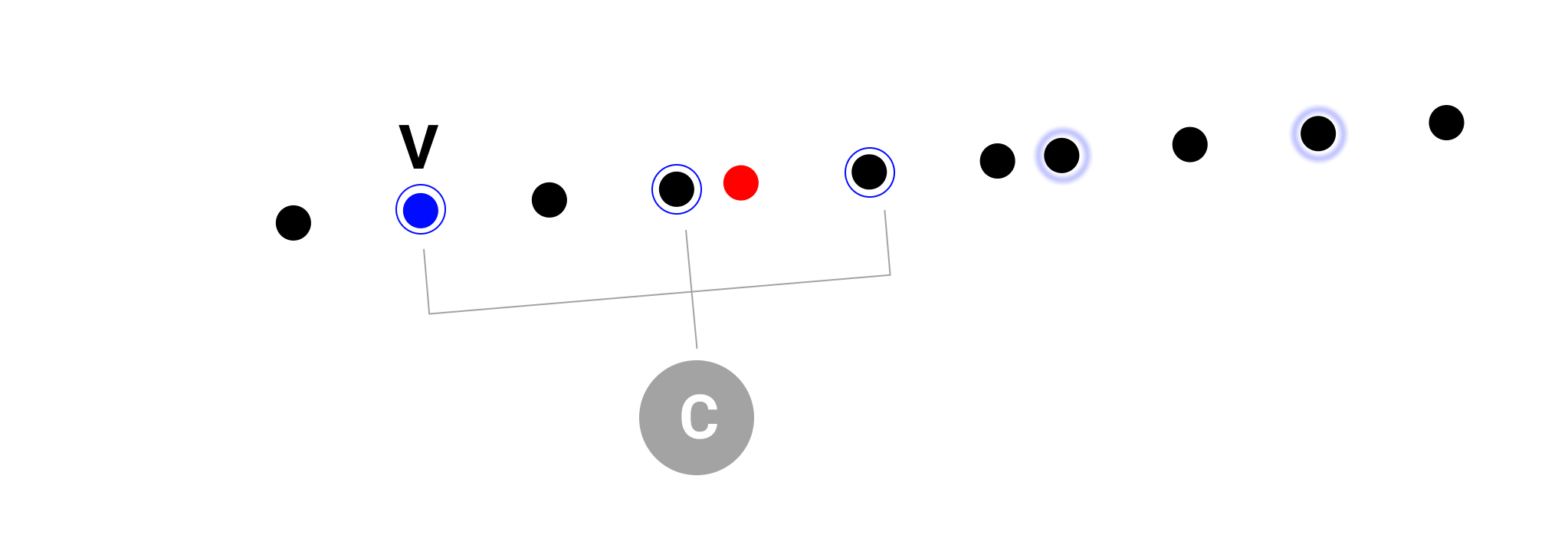

Figure 4. The major scale notes (red and black dots) (Graphics: W. Henke)

When writing a piece of tonal music, the first thing to do is to choose only seven notes from the set of twelve. The set of chosen notes constitutes a scale. In Fig. 4, notes 1, 3, 5, 6, 8, 10, and 12 are chosen: this is called a “major scale”. If note 4 were chosen instead of note 5, the resulting scale would be one of the “minor scales”, where “major” and “minor” refer to the distance between the first and the third note of the scale.
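The choice of seven positions out of twelve, and the single change that turns the major scale into a minor one, can be sketched as follows. We assume, for illustration only, that position 1 is the note C (Fig. 4 may start elsewhere), and we use sharps throughout for simplicity, so D# stands for the note usually written E♭ in this context.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR = [1, 3, 5, 6, 8, 10, 12]  # positions chosen in Fig. 4 (1-indexed)


def with_minor_third(positions):
    """Choose position 4 instead of 5, lowering the third note of the scale by a semitone."""
    return [4 if p == 5 else p for p in positions]


def spell(positions):
    """Turn 1-indexed positions into note names, assuming position 1 is C."""
    return [NOTE_NAMES[(p - 1) % 12] for p in positions]


minor = with_minor_third(MAJOR)
print(spell(MAJOR))  # ['C', 'D', 'E', 'F', 'G', 'A', 'B']
print(spell(minor))  # ['C', 'D', 'D#', 'F', 'G', 'A', 'B']
# Distance in semitones between the first and the third note of each scale.
print(MAJOR[2] - MAJOR[0], minor[2] - minor[0])  # 4 3
```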

If we instead play different notes from the same scale simultaneously, we have a chord. Basic chords are produced by playing three alternate notes of the scale (fig. 5).

Figure 5. The chords (C) produced on the first and on the second note of a major scale (Graphics: W. Henke)
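Stacking alternate scale notes is a simple indexing rule: take a scale note, skip one, take the next, skip one, take the next, wrapping around the end of the scale. A sketch, assuming a C major scale for illustration; `triad` is a hypothetical helper name.

```python
C_MAJOR = ["C", "D", "E", "F", "G", "A", "B"]


def triad(scale, degree):
    """Three alternate scale notes, starting on the given degree (1 = first note)."""
    i = degree - 1
    # Steps 0, 2 and 4 skip one scale note each time; modulo wraps past the seventh note.
    return [scale[(i + step) % len(scale)] for step in (0, 2, 4)]


print(triad(C_MAJOR, 1))  # ['C', 'E', 'G']
print(triad(C_MAJOR, 2))  # ['D', 'F', 'A']
```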

Depending on which notes are part of the scale, some chords span a greater distance between their first note (the lowest one, or “root”) and the second than between their second and third notes. Other chords present the opposite arrangement (fig. 6).

Figure 6. Tonal distances between notes in different types of chords (Graphics: W. Henke)

The first kind of chord is called a “major” chord, the second a “minor” chord. In tonal music, there are only three basic types of chords: major, minor, and diminished. In a major scale, a diminished chord is produced on the seventh note, and in it both distances between the notes are the shorter (minor) ones. One (7th), two (9th), or three (13th) notes can be added to any chord, but this only changes the strength of its internal tension, not its “meaning”. Similarly, chords can be inverted by changing the order of their notes but not the notes themselves: this changes their sense of stability but not, again, their “meaning”.
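The three chord types can be told apart by measuring each chord’s two internal distances in semitones: larger then smaller for major, smaller then larger for minor, two small ones for diminished. This sketch assumes C major, sharps-only note names, and chords in their basic (non-inverted) order; the helper names are our own. Applied to every note of the scale, it gives the diminished chord on the seventh note.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
C_MAJOR = ["C", "D", "E", "F", "G", "A", "B"]


def triad(scale, degree):
    """Three alternate scale notes starting on the given degree (1 = first note)."""
    i = degree - 1
    return [scale[(i + step) % len(scale)] for step in (0, 2, 4)]


def semitones(low, high):
    """Upward distance in semitones from note `low` to note `high`."""
    return (NOTE_NAMES.index(high) - NOTE_NAMES.index(low)) % 12


def quality(chord):
    """Classify a three-note chord in basic position by its two internal distances."""
    root, second, third = chord
    distances = (semitones(root, second), semitones(second, third))
    return {(4, 3): "major", (3, 4): "minor", (3, 3): "diminished"}.get(distances, "other")


print([quality(triad(C_MAJOR, d)) for d in range(1, 8)])
# ['major', 'minor', 'minor', 'major', 'major', 'minor', 'diminished']
```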

We usually associate a sense of perfection and rest with major chords, and we perceive minor chords as sadder and less stable. In tonality, the first note of a scale (in red) and its chord hold a meaning as a starting and ending point, a sort of center of gravity for that acoustic environment. This center of gravity is the place where all the tensions resolve.

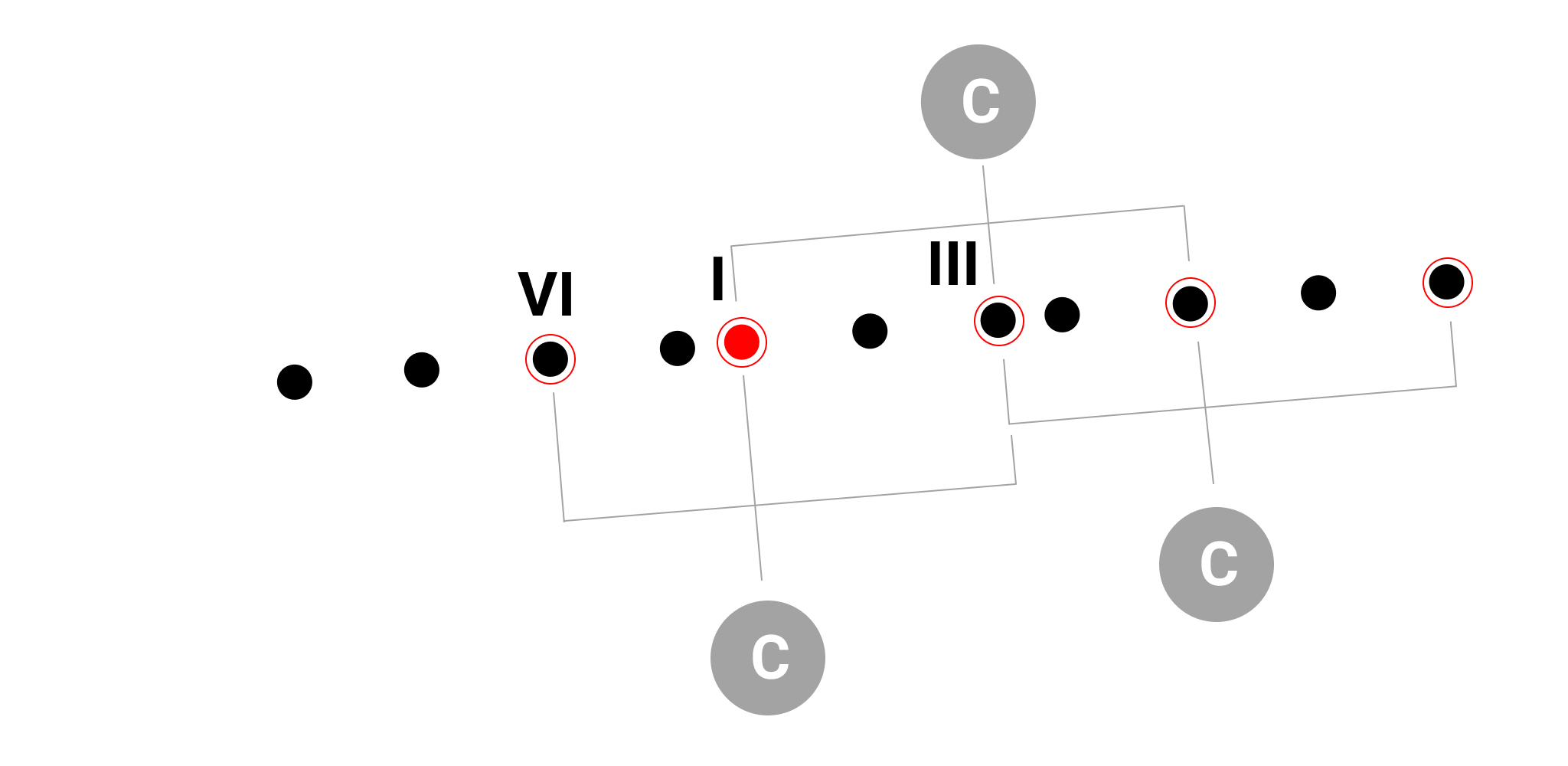

We tend to give the same meaning and function to the chords that are produced on the third and on the sixth note of the scale, because they have two notes in common with the first chord.
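The shared-notes argument can be checked by comparing every chord of the scale with the chord built on the first note. A sketch in C major, reusing the alternate-notes rule; the helper names are hypothetical.

```python
C_MAJOR = ["C", "D", "E", "F", "G", "A", "B"]


def triad(scale, degree):
    """Three alternate scale notes starting on the given degree (1 = first note)."""
    i = degree - 1
    return [scale[(i + step) % len(scale)] for step in (0, 2, 4)]


def shared_notes(a, b):
    """Notes two chords have in common, sorted alphabetically."""
    return sorted(set(a) & set(b))


first = triad(C_MAJOR, 1)  # ['C', 'E', 'G']
print(shared_notes(first, triad(C_MAJOR, 3)))  # ['E', 'G']
print(shared_notes(first, triad(C_MAJOR, 6)))  # ['C', 'E']
# Only the chords on the third and sixth notes share two notes with the first chord.
print([d for d in range(2, 8) if len(shared_notes(first, triad(C_MAJOR, d))) == 2])  # [3, 6]
```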

Figure 7. Chords generated on the VI, I and III note of a major scale (Graphics: W. Henke)

Figure 8. Chords generated on the fifth note of a major scale and the other notes of the same polarity (dotted) (Graphics: W. Henke)

We consider the chords that are produced on the other notes as belonging to an opposite polarity of meanings. The one that originates from the fifth note of a scale is the center of this polarity. This chord expresses tension to the same degree that the chord on the first note expresses resolution.

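Generally speaking, composers produce variety and a sense of movement in a piece of music by alternating chords from the first polarity (those built on the first, third, and sixth notes) with chords from the second (those built on the fifth note and the remaining notes).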
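As a toy model only, the two polarities can be written as sets of scale degrees, and a chord progression labelled accordingly; counting the switches between polarities gives a crude indicator of the alternation just described. The grouping follows the text; the example progression and the hypothetical `polarity_changes` measure are illustrative assumptions, not standard music-theory tools.

```python
# Chord degrees grouped as in the text: chords on 1, 3 and 6 share the polarity of rest,
# chords on the remaining degrees (centered on 5) share the polarity of tension.
REST = {1, 3, 6}
TENSION = {2, 4, 5, 7}


def polarity(degree):
    """Label a chord, identified by the scale note it is built on, with its polarity."""
    if degree in REST:
        return "rest"
    if degree in TENSION:
        return "tension"
    raise ValueError(f"degree must be between 1 and 7, got {degree}")


def polarity_changes(progression):
    """Toy measure of movement: how many times a progression crosses between polarities."""
    labels = [polarity(d) for d in progression]
    return sum(1 for a, b in zip(labels, labels[1:]) if a != b)


progression = [1, 4, 5, 1]  # example progression, chosen for illustration
print([polarity(d) for d in progression])  # ['rest', 'tension', 'tension', 'rest']
print(polarity_changes(progression))       # 2
print(polarity_changes([1, 3, 6, 1]))      # 0: no movement away from rest
```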

Graphs and Musical Representation

A graph is a mathematical model for describing a set of objects and the relationships among them. The objects in the graph are represented by vertices (or nodes) and each relationship between vertices by an edge (line or arc). Typically, a graph is depicted in diagrammatic form as a set of circles or dots for the vertices joined by lines or curves for the edges (fig. 9).

Figure 9. A graph (Graphics: W. Henke)
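In code, a graph is commonly stored as an adjacency list, which maps each vertex to the set of vertices it shares an edge with. A minimal Python sketch of an undirected graph; the `Graph` class and its methods are our own and do not correspond to any particular library’s API.

```python
from collections import defaultdict


class Graph:
    """Undirected graph stored as an adjacency list: each vertex maps to its neighbours."""

    def __init__(self):
        self.adjacency = defaultdict(set)

    def add_edge(self, u, v):
        # An undirected edge is recorded in both directions.
        self.adjacency[u].add(v)
        self.adjacency[v].add(u)

    def vertices(self):
        return set(self.adjacency)

    def edges(self):
        # frozenset makes the edge {u, v} identical to {v, u}.
        return {frozenset((u, v)) for u, nbrs in self.adjacency.items() for v in nbrs}

    def neighbours(self, v):
        # .get avoids inserting unknown vertices into the defaultdict.
        return set(self.adjacency.get(v, ()))


g = Graph()
for u, v in [("A", "B"), ("B", "C"), ("A", "C"), ("C", "D")]:
    g.add_edge(u, v)
print(len(g.vertices()), len(g.edges()))  # 4 4
print(sorted(g.neighbours("C")))          # ['A', 'B', 'D']
```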

Social networks are often described as graphs, called sociograms, whose vertices represent people and edges describe the relationships between them (Moreno 1934). Semantic systems are often described as graphs as well, with vertices representing concepts and edges relationships, as are hypertextual systems such as websites or mobile apps; here the vertices usually represent individual pages or screens and the edges represent the links between them.

Graphs have a long-standing tradition in information architecture practice. Diagrammatic representations of structure such as sitemaps, blueprints, and even customer journeys have made use, to various extents, of graph or graph-derived logic and visual conventions.

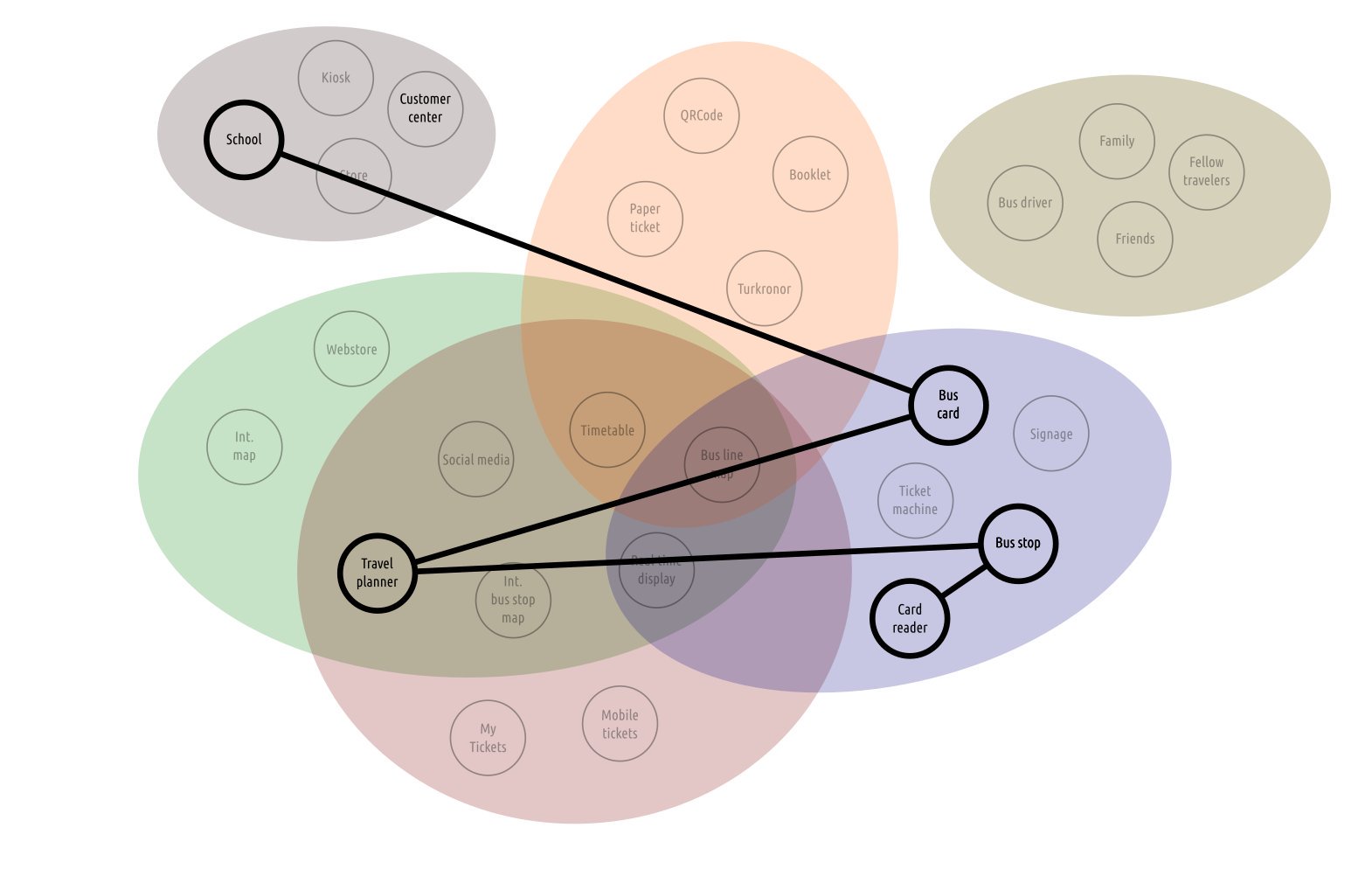

When describing a cross-channel ecosystem, the complex structure that provides the scaffolding to any type of experience (Resmini & Lacerda 2016), we can treat specific touchpoints as vertices and the paths that lead from one touchpoint to another as edges. Figure 10 shows a synthetic view of part of an experience, traveling home from school by bus, within a transportation ecosystem described with this vertex-and-edge syntax. Touchpoints, identified by the actors using the transportation system and represented here by circles, are linked together by black lines that describe an actor’s actual path through the ecosystem. The colored blobs represent channels: broad, contextual, non-exclusive categories that can be used to describe semantic proximity between touchpoints.

Figure 10. Channels and an actor’s path along touchpoints in a transportation ecosystem (Source: A. Resmini)
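The touchpoint-and-channel model can be sketched with an ordered list of touchpoints for the actor’s path and a set of touchpoints for each channel. The touchpoints and channels below are hypothetical, loosely inspired by the bus journey and not taken from Fig. 10; because channels are non-exclusive, a touchpoint may belong to more than one.

```python
# Hypothetical touchpoints and channels; not the data shown in Fig. 10.
path = ["school gate", "timetable app", "bus stop", "bus", "home"]
channels = {
    "street": {"school gate", "bus stop", "home"},
    "mobile": {"timetable app"},
    "bus service": {"timetable app", "bus stop", "bus"},
}


def edges_of(path):
    """The actor's route as a sequence of directed edges between touchpoints."""
    return list(zip(path, path[1:]))


def shared_channels(a, b):
    """Channels containing both touchpoints: a rough signal of semantic proximity."""
    return sorted(name for name, members in channels.items() if a in members and b in members)


for a, b in edges_of(path):
    print(f"{a} -> {b}: {shared_channels(a, b)}")
# school gate -> timetable app: []
# timetable app -> bus stop: ['bus service']
# bus stop -> bus: ['bus service']
# bus -> home: []
```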

Since a graph represents relationships, identifying what is or can be related, and what is not or cannot be, is a fundamental task. If we consider the purview of information architecture, there is definitely a “before the internet” and an “after the internet” when it comes to how concepts or objects can be in a relationship with one another (Weinberger 2007; Resmini & Rosati 2009). Before, establishing relationships between objects required long-term planning and structured effort, and modifying existing relationships was generally a difficult, slow-going activity.

Mainstream access to the internet has changed all of this: any object can be connected, that is to say that any object can be related to another through an interlocking network structure. Infinite possible vertices wait to be connected through infinite possible edges.

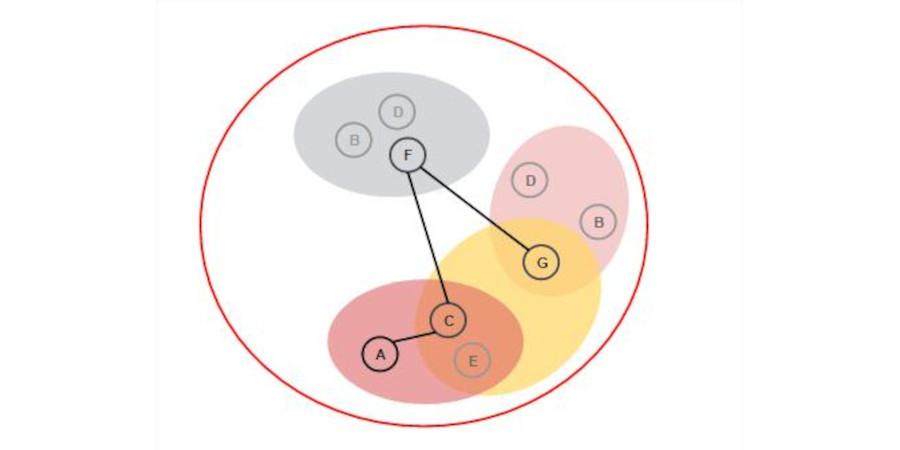

A piece of music can be represented as a graph, too. If we use the same visual language used in Fig. 10, the vertices in bold represent the notes of a simple melody, the edges stand for how the notes connect to each other in a sequence, and the colored blobs represent the chords to be played simultaneously with specific notes or vertices (fig. 11).

Figure 11. A piece of music represented as a graph

If the red line bounding the image stands for the key, the only thing missing is a sense of directionality when reading the piece, which could be provided by a visual indication of where the starting point is.
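The same idea can be sketched as data, with the directionality made explicit: directed edges between successive notes, a designated starting vertex, chord “blobs” as groups of positions, and the key as the set of notes allowed inside the boundary. The melody, chords, and key below are hypothetical and are not those drawn in Fig. 11.

```python
# Hypothetical toy melody; not the piece drawn in Fig. 11.
KEY_OF_C_MAJOR = {"C", "D", "E", "F", "G", "A", "B"}  # the boundary: notes allowed in the key
melody = ["E", "D", "C", "D", "E", "E", "E"]

# Vertices are (position, note) pairs so that repeated pitches remain distinct vertices.
vertices = list(enumerate(melody))
# Directed edges: each note points to the one played after it.
edges = list(zip(vertices, vertices[1:]))
# The explicit starting vertex gives the graph its reading direction.
start = vertices[0]
# The "colored blobs": which chord accompanies which vertex positions.
chords = [("C major", [0, 1, 2]), ("G major", [3]), ("C major", [4, 5, 6])]

inside_key = all(note in KEY_OF_C_MAJOR for _, note in vertices)
covered = sorted(pos for _, positions in chords for pos in positions)

print(start, len(edges))                                 # (0, 'E') 6
print(inside_key, covered == list(range(len(melody))))  # True True
```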

When we design an information architecture, we essentially define a graph. This graph describes and shapes the architecture that we use to create or facilitate meaningful experiences (Badaloni 2016; Badaloni 2020).

How We Perceive Experience

When we think of our environment as an inhabitable graph, we tend to see what we usually call “things” as vertices. The physicist Carlo Rovelli (2017) writes that what characterizes “things” is that they have permanence: we can ask ourselves where they will be tomorrow. Things are not the only elements in the environment, though: a substantial part of it is made of “events”, as Rovelli calls them. The archetypal event is a kiss: it exists for a brief moment, and wondering where it will be tomorrow makes little sense. Contemporary physics considers the world to be made of a net of kisses more than of a net of stones.

It could be argued that “stones”, i.e. things or vertices, can also be seen as a specific type of event, the one corresponding to our perception of a given thing. This is true, but it is important to recognize that a different cognitive process sits behind our making sense of each. When we think of things, what we perceive through our senses (sight, smell, touch) is foregrounded; when we consider an event, our emotions take precedence, or more precisely our emotional intelligence, a skill that “includes the ability to engage in sophisticated information processing about one’s own and others’ emotions and the ability to use this information as a guide to thinking and behavior” (Mayer et al. 2008).

For example, consider a group of friends. If we think of friends as “stones”, vertices, we can identify them based on their appearance: the color of their hair, their height, their clothes. But if we think of the “events” that make them “a group of friends”, we will describe the emotional quality or the nature of the particular edge that connects them.

In music, the notes are vertices of a graph that we experience through hearing. But we are aware of the relationships between them through our emotional intelligence. The flowing perception of all vertices and edges, that is to say of the notes and the relationships that bind them, is our experience of the specific architecture of that piece of music. We can extract meaning from it because of our previous exposure to similar types of music, of the social and cultural context in which we grew up, and of our individual understanding of music.

This is not at all dissimilar from what we do when we browse the web: we perceive the individual pages or parts of pages, the vertices of the graphs that we call “websites”, with our eyes, but we make sense of the experience by relying on the edges, the navigational pathways we follow and the semantic relationships between pages, and the emotional imprint that they leave on us.

The core of this argument is that our idea of the meaning of two elements of information depends mostly on the way we experience the edge that connects them and, consequently, the function we assign to that edge in relation to the environment it belongs to (Badaloni 2020).

The Role of Information Architecture in Shaping Experience

We often underestimate the part that information architecture plays in shaping the visible, audible, and tangible aspects of phenomena in environments and ecosystems. Music offers a key to fully understanding its role in the process, since we can actually hear how the same twelve-note set can sound radically different if the underlying logic that structures the relationships among the notes is altered.

The rules of tonality have produced masterpieces such as Mozart’s famous Symphony Number 40 in G minor, written in 1788, or the Beatles’ Yesterday, written by Paul McCartney in 1965. But until the 17th century, the information architecture of music was totally different from what it is today. It was based on modes, types of melodies made of small groups of notes pivoting around a primary pitch. Gregorian chants are a good example of modal music. They were originally performed by one singer or by a group of singers singing the same melody. Then multi-voice elaborations of these melodies became common, marking the beginning of Western polyphony. In this kind of music, chords were mainly a consequence of multiple melodies, or different portions of the same melody, being played at the same time. To get an idea of how modal music sounded, one could listen to the Salve Regina motet for four parts written by Josquin Desprez, a French composer born in the mid 15th century.

Both Desprez and Mozart were in a privileged position: they could compose their pieces using the existing musical information architectures of modality and tonality, respectively. Then, in the beginning of the 20th century, composers stretched tonality to its limits, started exploring new architectures, and began producing a new type of music.

Some of them, such as the German composer Arnold Schönberg, created their own original musical information architecture and used it for the remainder of their production. Others, such as the Japanese Toru Takemitsu, the Hungarian György Ligeti, and the Italians Goffredo Petrassi, Luciano Berio, and Ennio Morricone (the latter especially as regards his ‘absolute’ music), often felt the need to create a specific musical information architecture for each new piece. This may partly explain why they were less prolific than the composers of the past, since devising an entirely new architecture for each piece is time-consuming.

Schönberg based his music on ordered series made of all twelve notes. For that reason his musical information architecture is called dodecaphony. Dodecaphony aims at avoiding any perception of tonality, producing a sort of anti- or non-tonality in which, for example, any chord-like sound was intentionally avoided. Schönberg’s Suite for Piano, Opus 25, written in 1921, is particularly emblematic. Whether or not one finds the Suite and similar pieces hard to listen to, their asperity is not necessarily a consequence of their non-tonal architecture. To explore very different atonal musical experiences, one could for example listen to To the Edge of Dream by Takemitsu, written in 1983, and to Kyrie, written by Goffredo Petrassi in 1986 for choir and string orchestra. While both are excellent examples of atonal music, they sound very different from one another, because their underlying musical information architectures differ.
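The defining constraint of a twelve-note series, that every pitch class appears exactly once, can be checked mechanically. This sketch numbers the pitch classes from 0 to 11 and generates an arbitrary row with a fixed random seed; it does not reproduce the actual row of Opus 25, nor the other operations of Schönberg’s method.

```python
import random

PITCH_CLASSES = list(range(12))  # 0 = C, 1 = C sharp, ..., 11 = B


def is_tone_row(row):
    """True if the series uses each of the twelve pitch classes exactly once."""
    return sorted(row) == PITCH_CLASSES


def random_row(seed=None):
    """An arbitrary ordered series of all twelve notes (not a row by any composer)."""
    rng = random.Random(seed)
    row = PITCH_CLASSES[:]
    rng.shuffle(row)
    return row


row = random_row(seed=25)
print(is_tone_row(row))                     # True
print(is_tone_row([0, 2, 4, 5, 7, 9, 11]))  # False: a seven-note major scale
```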

The power of information architecture in shaping experiences lies exactly in its being a form of generative logic that structures the possible relationships between the elements of an environment.

Designing Architectures

Just like contemporary composers, designers working in digital often need to create a new, specific information architecture for what they design, one that best serves the purpose of the project. The downside of this approach is that the information architecture, and the affordances it enables and supports, are a somewhat new experience for users every time. This requires effort on the users’ side, since they cannot rely, partially or entirely, on previous encounters with similar structures to make sense of the new ones. Sometimes that unfamiliarity, and our failure to make sense of it, dampens our appreciation.

For example, the Hungarian composer György Ligeti wrote the eleven piano movements of his composition Musica ricercata under the self-imposed constraint of using only certain pitch classes in each movement. In the first movement he used only two notes (A and D), in the second three (E♯, F♯, G), and then added one more note with each new movement. Musica ricercata is a lovely listening experience if we judge “by ear” only, but it is wonderful when we understand the underlying architecture and can appreciate it more fully. Gustav Klimt said that “(a)rt is a line around your thoughts”: the line is necessary to make sense of the artwork, but so is our own engagement with the line itself. Naive appreciation, feelings, “liking something”, are all starting points, not points of arrival. The affordances that allow us to make sense of Ligeti’s Musica ricercata can be discerned after listening to the first two or three movements, but this act of making sense requires dedication: one really has to listen. For this reason, to make our meaning-making easier, some composers provide us with a key to their compositions in the form of titles, subtitles, or explanatory notes. In contemporary music a composer “designs” the architecture of the composition, the sounds that result from it, and the affordances that will allow listeners to understand the piece as interlocking parts of the same creative activity. These elements all influence each other.
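Ligeti’s self-imposed rule is simple enough to state as a function of the movement number. A sketch under the rule as described in the text, two pitch classes in the first movement and one more in each following movement; only the note sets for the first two movements come from the text, and `pitch_class_count` is a hypothetical helper.

```python
# Pitch classes of the first two movements, as listed in the text.
KNOWN_SETS = {1: {"A", "D"}, 2: {"E#", "F#", "G"}}


def pitch_class_count(movement):
    """Notes available in movement 1-11: two in the first, then one more in each movement."""
    if not 1 <= movement <= 11:
        raise ValueError("Musica ricercata has eleven movements")
    return movement + 1


counts = [pitch_class_count(m) for m in range(1, 12)]
print(counts)  # [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]: the last movement uses all twelve notes
print(all(len(notes) == pitch_class_count(m) for m, notes in KNOWN_SETS.items()))  # True
```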

This is incidentally also why Ennio Morricone could write his “musica applicata” for movies so much quicker than his “musica assoluta” pieces: part of the affordances of “applied music” is entrusted to the images. When we see a movie, what we see provides additional clues as to how we should understand the score, and this simplifies the composer’s work.

Harmonic Complexity

In “Understanding Context”, Andrew Hinton writes that “composition means we have to make decisions about what things are, what they mean and how they relate to one another, all coming from an understanding of how people will perceive the environment” (Hinton 2014, p. 342). That is the key to making the acoustic, visual, and tactile environments we design coherent and functional across the different media and contexts, both digital and physical, that users encounter.

“Composition” is a word shared by crafts as different as architecture, painting, and of course music: the arrangement of the parts so that the whole is meaningful and beautiful. A well-composed whole blends simplicity and gracefulness into elegance, a word related to the Latin verb “eligere”, “to choose”: and that is what composition ultimately means, choosing how things should be (Hinton 2014), whether in composing a symphony or in designing the architecture of someone’s experiences. When we compose, the structural clarity of the underlying architecture, the meaningful set of relationships between its vertices and edges, is the foundation for any “good” experience.

Humans share a love of beauty, and what is beauty if not someone’s judgment that an experience is graceful, harmonic, meaningful? Grace is effortlessness, gracefulness the prerequisite for harmony, and harmony that concurrence of the parts that makes us understand the whole as a meaningful organism.

In information architecture as in music, the elegance of a complex design, be it an experience, a fugue, an atonal piece, arises from the harmonic combinations of the parts, of the individual moments, of the simple melodies chasing one another. Everything else is just noise.

References

- Badaloni, F. (2016) Architettura della comunicazione: progettare i nuovi ecosistemi dell’informazione. Amazon.

- Badaloni, F. (2020) Progettazione Funzionale: design collaborativo per prodotti e servizi digitali. Amazon.

- Bendía, M. (2016) Ennio Morricone: La Musica Assoluta. Justbaked. https://www.justbaked.it/2016/12/06/ennio-morricone-la-musica-assoluta/

- Garrett, J. J. (2009) The Memphis Plenary. http://jjg.net/ia/memphis.

- Garrett, J. J. (2016) The Seven Sisters. https://medium.com/@jjg/the-seven-sisters-c2a7c4c0d0.

- Hinton, A. (2014) Understanding Context. O’Reilly Media.

- Hobbs, J. (2014) The design behind the design behind the design. 15th ASIS&T Information Architecture Summit. https://humanexperiencedesign.net/D3_JHobbs.pdf

- Locanto, M. (2007) Armonia come simmetria. Rapporti fra teoria musicale, tecnica compositiva e pensiero scientifico. In Borio, G. & Gentili, C. (eds) Armonia, Tempo (Storia dei concetti musicali, 1). Pp. 1-246. Carocci.

- Lucci, G. (2007) Morricone: Cinema and more. Mondadori Electa.

- Mayer, J. D., Salovey, P., & Caruso, D. R. (2008) Emotional Intelligence: New Ability or Eclectic Traits? American Psychologist. Vol. 63. No. 6. Pp. 503–517.

- Moreno, J. L. (1934) Who Shall Survive? Nervous and Mental Disease Publishing Co.

- Penbrooke, R. (2013) Storyline Data Model: Sharing the ontology for BBC News. BBC. https://www.bbc.co.uk/blogs/internet/entries/8dd3f2-632-371b-31c-7a13fbf1bacf.

- Resmini, A. (2012) Information Architecture in the Age of Complexity. Bulletin of the American Society for Information Science and Technology. Vol. 3. No. 1.

- Resmini, A. & Rosati, L. (2009) Information architecture for ubiquitous ecologies. Proceedings of MEDES ‘09, the International Conference on Management of Emergent Digital Ecosystems. No. 2. https://doi.org/10.1145/1643823.164385.

- Resmini, A. & Lacerda, F. (2016) The Architecture of Cross-channel Ecosystems. Proceedings of MEDES ‘16, the International Conference on Management of Emergent Digital Ecosystems. Pp. 17–21. https://doi.org/10.1145/3012071.3012087.

- Rovelli, C. (2017) L’ordine del tempo. Adelphi.

- Weinberger, D. (2007) Everything is Miscellaneous. Times Books.

- Wurman, R. S. (2001) Information Anxiety 2. Que.